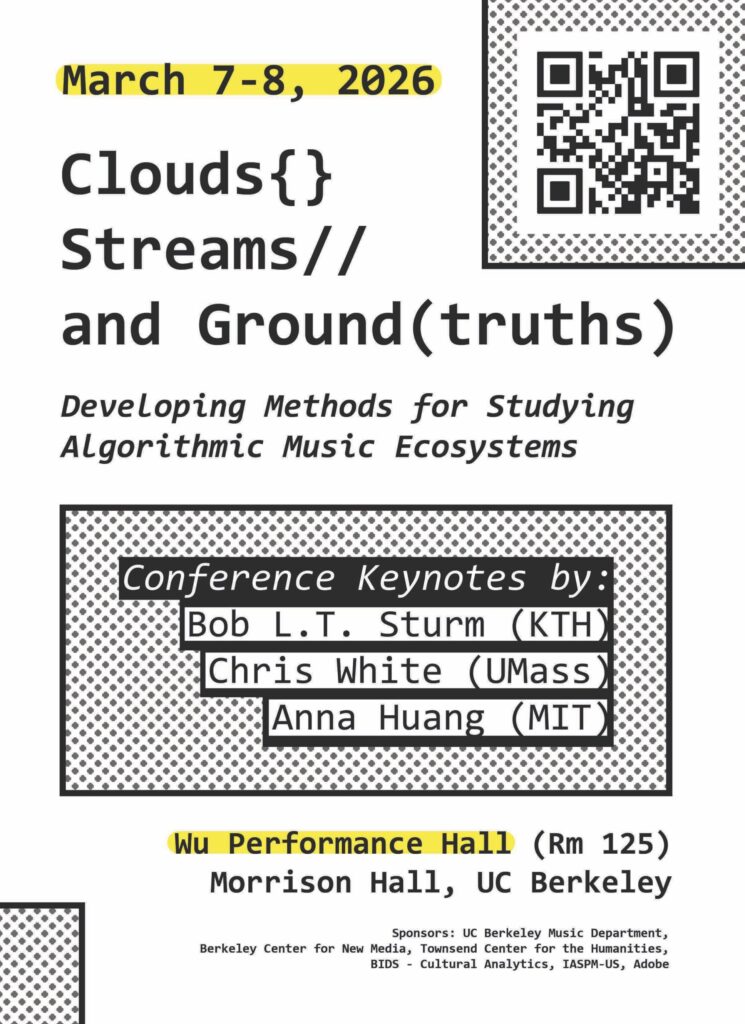

Clouds {} Streams // and Ground (truths)

Developing Methods for Studying Algorithmic Music Ecosystems

March 7-8, 2026

University of California, Berkeley

Wu Performance Hall (Room 125)

Morrison Hall

Agenda


“Fail fast, fail forward” echoes throughout Silicon Valley. The ethos it expresses validates (and often financially rewards) companies that pursue rapid technological development over more considered approaches. But this future-oriented vision has also made the resulting systems difficult to study: like the metaphorical “stream,” they are constantly in flux. One consequence: although digital music, streaming platforms, and cloud infrastructures have been around for decades, scholars lack a consensus on how to study them. Access to the past is often foreclosed by the relentless pursuit of digital futures.

This conference brings together an interdisciplinary group of scholars, researchers in the music industry, and legal practitioners to discuss the challenges of studying digital systems and to develop ways to make them more knowable: explicitly articulating what we can know about these systems and how we can know it. The conference will feature keynotes by Bob Sturm (KTH Royal Institute of Technology), Anna Huang (MIT), and Chris White (UMass Amherst); industry and legal roundtables; and panels on law, labor, and political economy, as well as on historical, computational, ethnographic, and theoretical methods.

This is an in-person event only.

Saturday, March 7, 2026

8:30a

Opening Remarks

8:40a

Opening Keynote: Bob Sturm, On The Need For A Philosophy of Music Information Retrieval

Break

10:00a

Session 1: History as Method

Max Alt and Jose Galvez: Listening with Numbers

Edmund Mendelssohn: Spectralism as Simulation: On the Modernist Prehistory of Artificial Intelligence

Rubina Mazurka Hovhannisyan: In Defense of the Human in Posthumanism: Reassessing Girolamo Diruta’s 1593 organ treatise Il Transilvano

11:30a

Lunch (101 Morrison Hall, provided for speakers)

12:30p

Industry Roundtable

Oriol Nieto (Adobe)

Maya Ackerman (WaveAI)

Jesse Engel (Google)

Moderator: Alex Krentsel (UC Berkeley, Google)

2:00p

Session 2: Computational Methods

Mattia Zanotti: Musical(?) Data: Digital Methods and Popular Music Studies

Annie Chu: Listening in the Age of the Algorithm: Bridging Musicology and HCI Methodologies

Dan Faltesek: Evaluating Social Network Analysis Methods for Music Recommendation Systems

Break

4:00p

Closing Keynote: Anna Huang

Sunday, March 8, 2026

8:30a

Session 3: Ethnographic Methods

Nicholas Shea, Fretboard Networks: Alternative models of genre, popularity, and syntax developed on digital artifacts sourced from the ultimate-guitar.com guitarist community

Amy Cheatle, What Algorithms Can’t Hear: Atmospheres and Afterglows in the Sonic Worlds of the Violin

Travis Lloyd, Independent Musicians’ Experiences of Algorithmic Music Ecosystems

Break

10:30a

Session 4: Theoretical Methods

Teresa Turnage, Fractal Echoes: Apophenia, Paranoia, and Recursive Listening

Emerson Johnston, Hype as Method: Studying the Political Economy

Robin James, Atmospheric Orientations: Phenomenology as a method for studying algorithmic music ecosystems and their ethics

12:00p

Lunch (101 Morrison Hall, provided for speakers)

1:00p

Legal Roundtable

Tal Niv (UC Law San Francisco)

Britt Lovejoy (Latham & Watkins)

Moderator: Carla Shapreau (UC Berkeley Law)

Break

2:30p

Session 5: Labor, Law, and Political Economy

Vanessa Borsan, Musicians’ Recurring Concerns Over Technology: From Mechanical Reproduction in Interwar Yugoslavia to Generative Production in Contemporary Slovenia

Matthew Blackmar, Attribution Engines: The Twilight of Forensic Musicology?

Jeremy Morris, Fake Artists, Fake Listeners, Real Work: Generative AI and Streaming Services

4:00p

Closing Keynote: Chris White, A Brief History of AI-ify-ing music, 794 C.E.-present

Keynote Speakers

Bob L.T. Sturm (KTH)

I am the PI of the MUSAiC project (ERC-2019-COG No. 864189). Before becoming an Associate Professor of Computer Science at KTH in 2018, I was a Lecturer in Digital Media at the Centre for Digital Music, School of Electronic Engineering and Computer Science, Queen Mary University of London. Before joining QMUL in 2014, I was a lektor (associate professor) at the Department of Architecture, Design and Media Technology, Aalborg University Copenhagen. Before joining AAU in 2010, I was a postdoc with the “Lutheries – Acoustique – Musique” (LAM) team at the Institut Jean le Rond d’Alembert, Paris 6. I received my PhD in Electrical and Computer Engineering in 2009 from the University of California, Santa Barbara.

Keywords: machine listening for music and audio, evaluation, music modeling and generation, ethics of AI, machine learning for music, digital signal processing for sound and music, folk music, Irish traditional music, English Morris dancing, Scandinavian folk music, accordion, caricature, painting

Chris White (UMass)

Christopher White is Associate Professor of Music Theory at the University of Massachusetts Amherst, having previously taught at the University of North Carolina at Greensboro and Harvard University. Chris received his PhD from Yale University and also attended Queens College–CUNY and the Oberlin College Conservatory of Music.

Chris’s research uses big data and computational techniques to study how we hear and write music. He has published widely in such venues as Music Perception, Music Theory Online, and Music Theory Spectrum. His first book, The Music in the Data (2022, Routledge), investigates how computer-aided research techniques can hone how we think about music’s structure and expressive content. His second book, The AI Music Problem (2025, Routledge), outlines ways that music poses difficulties for contemporary generative AI. He has also published in popular press venues such as Slate, The Daily Beast, and The Chicago Tribune on a wide range of topics, including music analysis, computational modeling, and artificial intelligence. Chris also remains an avid organist, actively performing and collaborating across New England.


Anna Huang (MIT)

In Fall 2024, I started a faculty position at the Massachusetts Institute of Technology (MIT), shared between Electrical Engineering and Computer Science (EECS) and Music and Theater Arts (MTA). Before that, I spent 8 years as a researcher at Magenta in Google Brain and then Google DeepMind, working on generative models and interfaces to support human-AI partnerships in music making.

I created Coconet, the ML model that powered Google’s first AI Doodle, the Bach Doodle. In two days, Coconet harmonized 55 million melodies from users around the world. In 2018, I created Music Transformer, a breakthrough in generating music with long-term structure and the first successful adaptation of the transformer architecture to music. Our ICLR paper on Music Transformer is currently the most cited paper in music generation.

I was a Canada CIFAR AI Chair at Mila, and I continue to hold an adjunct professorship at the University of Montreal. I was a judge and then an organizer for the AI Song Contest (2020–22). I did my PhD at Harvard University, my master’s at the MIT Media Lab, and a dual bachelor’s in music composition and CS at the University of Southern California.

Sponsors

University of California, Berkeley Department of Music

UC Berkeley Townsend Center for the Humanities

IASPM-US

Berkeley Center for New Media

Adobe

Berkeley Institute for Data Science Cultural Analytics Group

Organizers

Allison Jerzak

UC Berkeley

Allison Jerzak is a PhD candidate at the University of California, Berkeley, where she studies the history of digital music and music recommendation. Allison is also a keyboardist and currently plays harpsichord and organ for UC Berkeley’s Baroque Ensemble.

Ravi Krishnaswami

Brown University

Ravi Krishnaswami is a PhD candidate at Brown University researching AI and automation in music for media. He is a composer and sound designer for advertising, television, and games, and co-founder of the award-winning production company COPILOT Music + Sound. He plays guitar in The Smiths Tribute NYC and has studied sitar with Srinivas Reddy. He is the Valentine Visiting Assistant Professor of Music at Amherst College.